A comprehensive guide to parallel video decoding

August 26, 2011 16 Comments

As promised, today we’ll talk about video decoding. We will review the most important operations that a decoder has to fulfill, and for each case see what kind of speed boost we can expect with a shader based video decoding. Because video decoding is a complex process and one blog post can hardly be thorough, I’ll provide related links for each chapter, if you wish to start your own research on a particular subject 😉

A little bit of background is needed before going further.

1/ Colors coding

http://en.wikipedia.org/wiki/Rgb

http://en.wikipedia.org/wiki/Ycbcr

http://en.wikipedia.org/wiki/Chroma_subsampling

Colors are usually represented in the 24 bits RGB scheme (8 bits for each of the 3 components). It is the “native format” for most of the screens and most importantly for the human eye.

But when it comes to video compression, a different scheme is used. It’s called YCbCr, and you may know it as “YUV”. It is not a different colorspace than RGB but rather a different way to write it. The fact is that the human eye can see higher difference of luminosity than difference of colors. As for a RGB pixel, a YCbCr pixel has 3 components : Y is the “luma” component, Cb and Cr the “chroma” blue and red. The whole components of the same type of a picture are call a plane. The trick is to store the two chroma components at quarter resolution and the luma component at full resolution. This is called “chroma sub-sampling”, and the VP8 codec only support “4:2:0” mode.

So with a 4:2:0 sub-sampling, we have an overall of 50% of the components removed, for a drop of quality almost imperceptible !

2/ Block based coding

Most of the computation that we are going to study don’t work on the whole picture, but instead the video encoders divide the picture in smaller block of data, easier and especially faster to process.

First we have the “macroblocks” which are 16×16 pixels wide, used by the inter-prediction process. Then we have the “blocks” of 4×4 pixels, used by the intra-prediction process. Each macroblock includes 16 4×4 blocks of luma components, and 32 2×2 blocks of chroma components (8 for chroma blue, 8 for chroma red).

In this post I’ll mostly talk of the treatments applied on the 4×4 luma blocks in order to save time, not going too deep on the details.

3/ Entropy decoding

http://en.wikipedia.org/wiki/Entropy_encoding

http://en.wikipedia.org/wiki/Arithmetic_coding

The first process in “decoding order” is the entropy decoder. Lets paraphrase wikipedia :

In information theory an entropy encoding is a lossless data compression scheme that is independent of the specific characteristics of the medium.

One of the main types of entropy coding creates and assigns a unique prefix-free code to each unique symbol that occurs in the input. These entropy encoders then compress data by replacing each fixed-length input symbol by the corresponding variable-length prefix-free output codeword. The length of each codeword is approximately proportional to the negative logarithm of the probability. Therefore, the most common symbols use the shortest codes.

The only thing we need to known, is that entropy decoding is a very linear process and cannot be parallelized. The symbols are decompressed one by one (the bitstream is processed bit by bit in the case of arithmetic coding, i/o are bufferized however) and each symbol decoded updates the probability context of the decoder. Loose one bit, loose one frame.

Conclusion ? The entropy decoding stays in the CPU. What we need to do though is to decode the bitstream content for one frame in one pass, and not to start other processes as soon as enough data are available. That way we will have all the data to start other processes in parallel.

4/ Inverse transformation

http://en.wikipedia.org/wiki/Discrete_cosine_transform

http://en.wikipedia.org/wiki/Walsh-Hadamard_transform

The DCT, initials for Discrete Cosine Transform, is the operation at the heart of today video compression formats. The DCT is a reversible and lossless operation, meaning that the encoder and the decoder do the exact same thing, and that it does not directly help data compression, but just reorganize the coefficients of a block by frequency order, with the high frequencies at the beginning of the block, and the low frequencies at the end.

Note that the first coefficient of the block is called the “DC” coefficient, and represents the average of all the values of the coefficients of the block. The other coefficients are called “AC”.

(original picture – original coefficients – transformed coefficients – “zigzag scan pattern”)

The VP8 codec uses a 2d DCT-II on AC coefficients of each 4×4 block of luma pixel in frame.

The DC coefficients are then bundled by groups of 16 (so each DC coefficient of all 16 4×4 blocks of a macroblock) and applied with a secondary WHT transform.

As the DCT and WHT transformations are basically matrix calculus, they does fit well inside shaders. Plus, the blocks of data to process are independent upon each other, which makes parallelization easy. The same DCT is applied to all of the blocks of the picture. But there is a major drawback that we will saw in chapter 6.

5/ Inverse quantization

http://en.wikipedia.org/wiki/Quantization_(image_processing)

The quantization stage reduces the amount of information by dividing each transformed blocks of coefficient (a 2d matrix) by a “quantization matrix” and then by a “quantization parameter (used to adjust the final quality of the block) to reduce the quantity of possible values that value could have. All near zero values are zeroed.

Because this makes the values fall into a narrower range, this allows entropy coding to express the values more compactly.

(transformed coefficients – transformed & quantized coefficients- “zigzag scan pattern”)

Here is a quick overview of the quantity of data in a block before and after transformation + quantization :

A simple way to gain a lot of speed using shaders is to process several 4×4 blocks of data simultaneously as there is no dependency between blocks (in the limits of available shader units), and to process 4 data at a time inside each block.

6/ Intra-prediction

www.vcodex.com/files/h264_intrapred.pdf

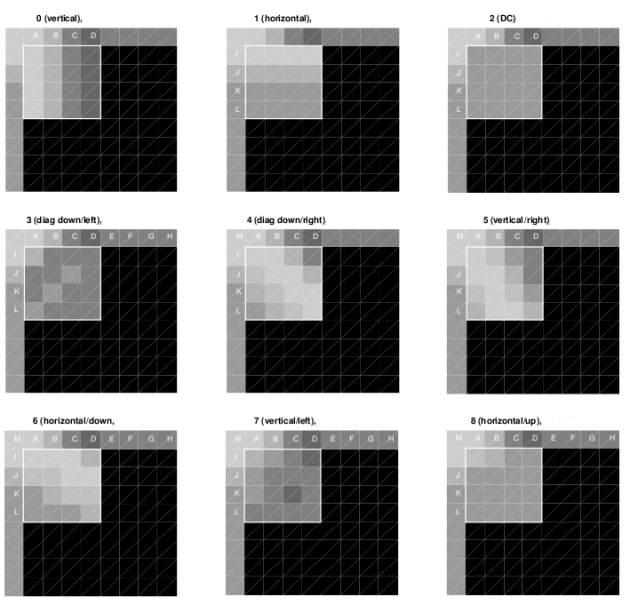

Intra-predictions use spatial redundancy inside a frame to reduce the amount of data necessary to encode adjacent blocks. It is a major compression feature, able to greatly reduce the size of a single picture. VP8 has 10 available intra-prediction schemes, which consist in copying the edge of the adjacent block(s) through another block, as seen in the following picture :

The major problem of the intra-prediction is that it reads the edges of adjacent reconstructed blocks. To reconstruct a block, the transformation, quantization and intra-prediction must have been performed on that block.

As the intra-prediction is in the middle of the operations needed to reconstruct a block, it mean that we cannot process these blocks in parallel, because each block can depend on the left, up-left, up-right block.

So basically each time we want to intra-predict a block we have to wait for the result of the previous intra-prediction process. It means that it suppresses the gain of doing parallel block transformation + quantization, because it would need to wait for the intra-prediction results to reconstruct the blocks anyway.

In a CPU implementation we do things like that :

– for each macroblock of a picture

* entropy decode a macroblock

– for each 16 blocks of the macroblock

* entropy decode a block

* transformation

* quantization

* intra-prediction

* block reconstruction

Once a set of data is loaded, it is better to perform all of the operations on that set while the data are still “hot” into the CPU cache, avoiding cache miss and expensive memory reads.

In a GPU implementation, we would try to do that :

- entropy decode everything in a frame

- upload data to the GPU memory

- transform all the blocks (in parallel)

- quantifiy all the blocks (in parallel)

- intra-predict all the blocks

- reconstruct all the blocks (in parallel)

So what to do with the intra-prediction stage ?

When you start several intra-predictions processes at different places of the picture, ignoring the dependency between block is not an option. Do one mistake during the decoding, and that mistake will propagate itself :

One another obstacle is that as each block uses a different intra-prediction scheme, we would need to setup shader programs for each scheme. Each of these shader programs must have a list of blocks it can handle, and they would have to wait for the availability of the reconstructed adjacent blocks of these inside the list to perform their task. But doing so (meaning doing linear processing using a parallel processor) could be counter productive performance wise.

The other solution would be to download transformed and quantified data (after parallel processing) to the main memory, do the intra-prediction in the CPU and re-upload the data for the reconstruction stage and further processing.

So far, my decision is to leave all the treatments before block reconstruction to the CPU, and concentrate on the parallelization of heavier treatments. The SIMD CPU optimizations (MMX, SSE…) will be reintroduced.

7/ Inter-prediction (motion compensation)

http://en.wikipedia.org/wiki/Motion_compensation

The inter-prediction, or motion compensation, is a practical way to use the temporal redundancy between frames. As two frames which follow each other have a great chance to be very similar, each macroblock of the picture can be just copied into another picture instead of being recoded. If needed, the copied macroblock can be slightly moved and interpolated to match the destination picture. This process can be quite intensive, up to 70% of CPU time, depending on the amount of inter-predicted blocks in the frame of course.

The VP8 specification allows up to 3 frames to be used as reference.

If the reference frames are already loaded into the GPU memory, recomposing a frame by copying macroblocks between GPU textures is faster than doing the same thing across CPU memory.

One other advantage is that sometimes an interpolation filter must be applied to the block of texture copied, and texture filtering is one thing a GPU do fast, or at least faster than a CPU.

8/ Loop filter

http://en.wikipedia.org/wiki/Deblocking_filter_(video)

As we talked about on this post, most of the processing during video decoding are done on small blocks of data. One side effect is that differences can appear between blocks when they are compressed with slightly different parameters.

To counter this “blocking effect”, a “loop filter”, or “deblocking filter” must be applied on the final picture. This filter is usually applied as a post processing filter for low bitrate videos.

In VP8 as in the H.264, the loop filter is a mandatory feature, allowing the encoder to lower the bitrate by 10%, regained in quality by applying the loop filter in both encoding and decoding paths. The only problem is that the loop filter is the most expensive operation of the VP8 decoding process, from 30% and up to 60% of the total decoding time, especially with high definition videos.

VP8 has two filter modes, a low complexity “simple filter” and a high complexity “normal filter” (used in almost every video). These two modes can be adjusted on a frame basis. The filter is somewhat adaptive, as some filtering parameter can be adjusted for each macroblock.

The goal is to smooth the rough edges between each block of the three planes (Y, Cb and Cr) for the normal filter, and only the Y planes for the simple filter. Depending if we are on a block or macroblock boundaries the process is not exactly the same, as 16×16 macroblock can pack parameters specific to a group of 16 of 4×4 blocks, there can be bigger differences at these macroblocks edges, so adjacent blocks edges within a macroblock may be concatenated and processed at once in their entirety.

- left macroblock edge (16 pixels wide)

- each left edge (4 pixels wide) for every 16 blocks inside the macroblock

- top marcoblock edge (16 pixels wide)

- each top edge (4 pixels wide) for every 16 blocks inside the macroblock

The deblocking process reads and then writes to the same values. But the shader programs usually input and output to different textures. The idea would be to create a new texture to output only delta values for each pixel, and then to compose the “delta texture” to the “frame texture”.

This VP8 deblocking process is linear, each macroblock must be fully processed before staring the next one. In order to parallelize it, it should be safe to start several deblocking process at different macroblocks (chosen depending on their filter strength) at the expense of some particular edges left unfiltered.

9/ Color space conversion

http://www.itu.int/rec/R-REC-BT.601-7-201103-I/en

Color space conversion is the last process in “decoding order”. As we’ve seen in chapter 1, the video formats don’t store their pixels in RGB, but in subsampled YCbCr format.

To convert a pixel between the two color models, the VP8 uses the ITU recommendation 601, which defines among some other things how to convert an analog RGB signal to a digital YCbCr signal. From there an equation to convert digital YCbCr to digital RGB can be extrapolated :

Where R, G and B are the 3 components of a 24 bits RGB color space, and Y, Cb and Cr are the 3 components of a 24 bits YCbCr color space. The prime denotes a gamma-corrected component.

The YCbCr to RGB conversion can be intense on the CPU, for each pixel, 9 divisions, 13 multiplications, 11 additions/subtractions. With some rounding and factorisation we can find a faster implementation, with still 7 multiplications, 7 bitshift and 7 additions/subtractions :

Once again, to do that operation faster in a shader unit, convert 4 components at a time, and start simultaneously several conversion processes at different places of the frame.

10/ Conclusion

Video codecs are not conceived with performance in mind, as the primary goal is to save a lot of data for transmission. Video decoding is not really a “parallel job”. Some operations are by nature linear, and their handling by a GPU have to be carefully thought. The raw power of a GPU is only the addition of the raw power of its many computing units. When some operations cannot be parallelized, a GPU will end up being slower than a CPU for the same task.

The hard thing when it comes to GPU decoding is to properly identify which parts of the decoding can be parallelized and wich parts cannot. The key aspect is to do the maximum of operations inside the GPU memory, with shader programs working directly in that memory, and to try to have as little interaction as possible between CPU and GPU. Best case scenario, a shader outputs its results directly to another shader inputs. Uploading data to the GPU memory or scheduling shader programs introduces some latency which could became a net performance penalty in the decoding if done too frequently.

If you have any question regarding the content of this post, ask these in the comments and I’ll do my best to answer it.

11/ Summer of Code conclusion

As you may know the Google Summer of Code 2011 ends today.

I did not finish my project and only completed half of it. The “client side” of the VP8 VDPAU implementation is working and is currently being reviewed by the libvdpau maintainers. The mplayer/mplayer2 and ffmpeg/libav patches are also in shape and depending on the feedback on the libvdpau patch they will be sent upstream for review.

The Gallium3D decoder implementation inside Mesa is up and running, tested on r600g and softpipe drivers, but is only a slimmed down port of the libvpx decoder. Currently the only hardware accelerated part is the color space conversion stage, mainly because of the work of Younes Manton and Christian König on the G3DVL interface and the VDPAU state tracker.

My work here is not done, and the ideas expressed in this post have yet to be concretized. I still have some free time, and as I start working late on this project it’s only fair that I dedicate this time to move forward with the decoder implementation.

I’d like to thanks every one that has been involved in this project, starting with my mentor Jakob Bornecrantz who has been very patient with all my questions these past months, Younes Manton and Chrisitan Konïg who have been working on the current G3DVL interface and video decoders, Matt Dew for helping GSoC students since day one, and thanks to everyone else !

Special thanks to Chrisitan Konïg and AMD for providing hardware for the project !

Finally a big thanks to Google, to give students around the world an awesome learning opportunity with the Summer of Code program, and a great way of starting working inside a free software community.

Excellent writeup, a very interesting read. Thanks for your work this summer.

Thank you !

It seems like this post is almost a great introduction for newcomers to modern video codecs. One quibble:

“Video codecs are not conceived with performance in mind”

is not right. The designers of VP8 (and every other codec) were constantly striving for optimal CPU performance, in conjunction with their other goals. They could certainly have improved compression quality by using more CPU time, but they chose not to.

You might instead accurately say that VP8, and most other modern video codecs, are not designed for highly parallel decoding.

You are right, some codecs may have been designed with greater speed concerns than others, and none of them have been designed for highly parallel decoding.

But in the case of VP8, the loop filter and some interpolation technics are overcomplicated, and I don’t know if these decisions made sense if the goal really was to make a fast codec, because you would not want to use 50% more CPU time to save only 15% of data. Other solutions where available.

The other example is H.264, where a lot of (great) features like CABAC are here to squeeze out every single bit of compression possible, regardless of the performance impact or overall codec complexity.

Could optimistically parallelise intra-prediction decoding and detect errors if any happen?

e.g. decode [1][2][3] in parallel with [4][5][6], and then check if edge after [3] was the same you assumed when starting with [4]. If not, redo it. if yes, you’ve got performance win!

Yes it should be possible, but then we would have to guess the values (each between 0 and 255) of :

– at best, one edge, 4 pixels

– at worst, three edges and a corner, 13 pixels

And that is definitly hard to do.

Hey, great work so far! I actually independently started working on a GPU VP8 decoder (at the OpenCL+GL level though) in April, you may be interested in the thoughts I wrote up in this HN comment: http://news.ycombinator.com/item?id=2949394

Very useful comment indeed thank you, I hope you don’t mind if I answer here.

I took the same the same approach than you, start at the top and replace CPU operations by GPU ones. Color space convertions are done and I am working on the loop filter, and I’ll finish by inter-predictions. That should be enough to beat a purely CPU based decoder performance wise.

For the intra-predictions it might be easier to do the ones in the middle of inter-predicted pictures, the dependencies on adjacent blocks could be easily solved if these blocks are inter-predicted and thus already available, and otherwise it should be at least easier to find new and independant entry points to start more intra-prediction processes in parallel.

For the loop filter your idea is great I was looking to do something similar. Plus, for the normal loop filter we can do the 3 planes at the same time, so with a 4:2:0 subsampling we can complete both chroma planes in less than half the amount of time required to do the luma plane.

Yeah, I think for intra prediction it will be necessary to create a map of the dependencies of all the blocks that need predicting for each frame, and to schedule the GPU tasks accordingly. Sticking with P-frames for now is probably a good plan.

Running the chroma and luma tasks in parallel can be done for all stages of the pipeline, although presumably only the loop filter can be run in U+V lock-step.

Sorry for the mundane question, but which graphing software is used in blockcoeff.png? I’ve been looking for something just like it.

Really great overview of the decoding process in general, even if read without parallelization in mind!

Thanks !

For blockcoeff.png, I actually made this one with libreoffice calc 😉 It’s just a 3d graph using 4 series of data. I emailed you my template, hope that helps.

Pingback: Stuff The Internet Says On Scalability For September 9, 2011 | Krantenkoppen Tech

Pingback: High Scalability - High Scalability - Stuff The Internet Says On Scalability For September 9, 2011

Pingback: | Blog | Stuff The Internet Says On Scalability For September 9, 2011

Pingback: Developing A Shader-Based Video Codec | Breaking Eggs And Making Omelettes

Marvelous!